Last Updated on March 12, 2021 by Editor Futurescope

Okay, this may sound like a visit to the desk of the Skynet engineer who, in fiction, creates the electronic brain that soon breaks free of human civilization and brings about its doom. Only this news is not fiction, and amid the flood of tech headlines it could easily go unnoticed, given its remarkable technical complexity. But in that case we would miss a key sign of the times. In a nutshell, Google has released a paper in which it presents, for the first time, its work on TPU chips specialized in machine learning (neural networks, artificial intelligence, speech and image recognition, autonomous cars, and so on). The work will be presented at the International Symposium on Computer Architecture (ISCA) in Toronto on June 26 under the rather obscure title "In-Datacenter Performance Analysis of a Tensor Processing Unit." Wow.

Google's machine learning chips are between 15 and 30 times faster on inference workloads than conventional processors, such as the one in your notebook (the CPU), and graphics processors (the GPUs that render on-screen images). These chips, known generically as ASICs (application-specific integrated circuits), were tested against Nvidia's Tesla K80 GPUs and Intel's Haswell CPUs.

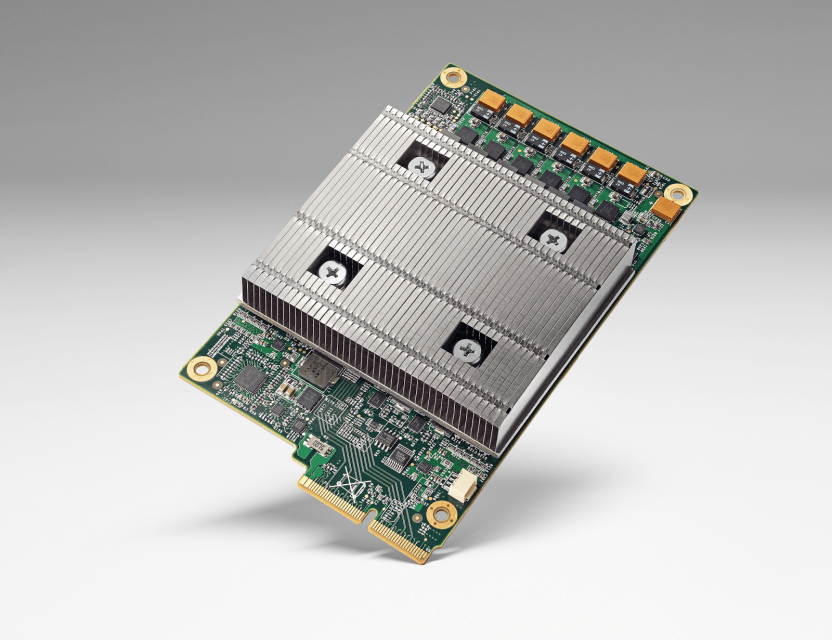

Google calls its chips TPUs (Tensor Processing Units) because they are designed for TensorFlow, the machine learning framework the company developed, and until now little had been known about these processors. The paper released yesterday reveals that the TPUs handle the second of the two stages of the machine learning cycle.

The new Google TPU

TPU stands for Tensor Processing Unit, the processors Google uses to run machine learning tasks, including both training and inference.

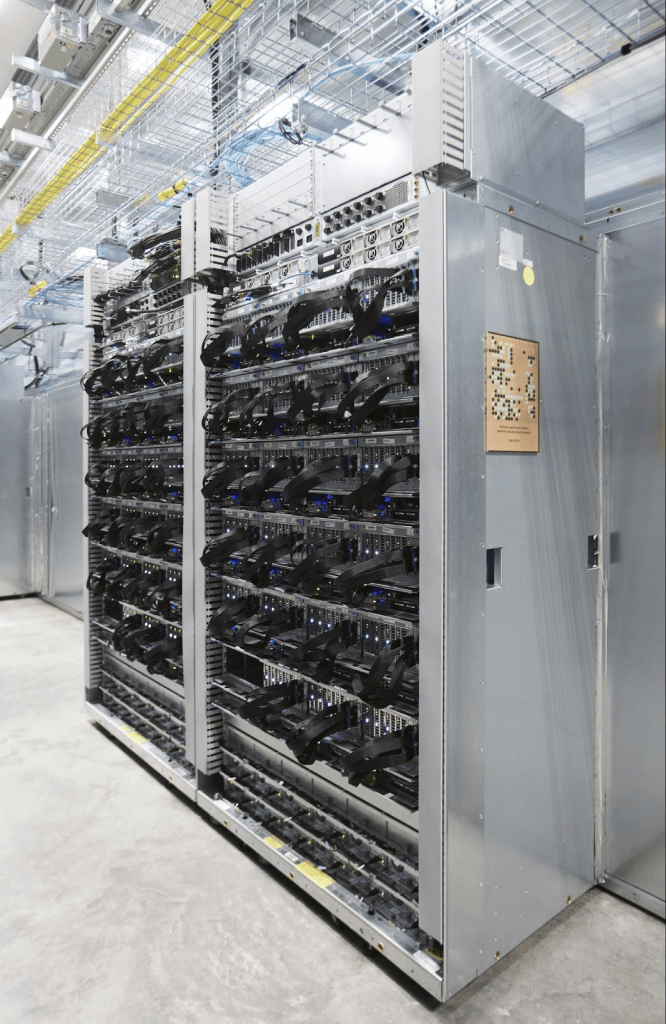

TPUs are currently used in more than 100 Google products, from Street View and self-driving cars to voice search and Inbox's automatic replies. What started as a mere experiment is today one of Google's biggest projects. According to Google, combined with its software advances, it has delivered a leap equivalent to about seven years of progress, that is, three generations of Moore's Law.
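As a rough check on that equivalence, assume each Moore's Law generation approximately doubles performance (a common simplification, not a figure from the paper). Three generations then compound to an eightfold gain, and seven years spread over three generations works out to a little over two years each:

$$
2^{3} = 8\times \quad \text{(three doublings)}, \qquad \frac{7\ \text{years}}{3\ \text{generations}} \approx 2.3\ \text{years per generation}
$$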

Google has made impressive breakthroughs in this field over the last few years. Thanks to neural networks, it has advanced on several fronts:

– Google Translate: translation quality has improved greatly thanks to training on millions of sentences in dozens of languages.

– Google Search: the search engine has become smarter, better able to detect higher-quality content and to filter out spam and duplicate content copied from other sources.

– Google Photos: it can identify objects and faces and group photos according to what each image contains.

– Games: DeepMind's AlphaGo program defeated Lee Sedol, one of the world's best Go players, making it clear that artificial intelligence is becoming less predictable.

This work requires enormous amounts of computation, both to train the models and to run them once trained. For this, Google has designed, built and deployed a family of Tensor Processing Units, or TPUs, to support growing amounts of machine learning computation, first internally and now for external customers.

The first TPU Google introduced was designed to run trained machine learning models quickly and efficiently, for tasks such as translating a set of sentences or choosing the next move in Go. The new system is built to eliminate bottlenecks and maximize overall performance, and it can perform several tasks at the same time.

These new TPUs are coming to Google Compute Engine as Cloud TPUs, where they can be connected to virtual machines of all kinds and combined with other hardware, including Skylake CPUs and NVIDIA GPUs. They can be programmed with TensorFlow, the most popular open-source machine learning framework on GitHub, and Google is introducing high-level APIs to help anyone use them.

The goal is to offer accelerated computing power on demand, with no upfront capital costs, helping companies build the best machine learning systems on Google's Cloud TPUs.

Those interested can register on this page, describing the characteristics of their system so that the right solution can be found.

Learning in two steps

Roughly speaking, for a computer to learn to do something, you first have to train it. GPUs are typically used for this, because training relies on floating-point arithmetic, at which graphics processors excel. The second phase is known as inference (or prediction), and on the TPU it uses 8-bit integers. That, Google claims, is where the TPU makes the difference.
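To make the float-versus-8-bit distinction concrete, here is a minimal sketch of integer inference. It assumes a simple symmetric linear quantization with one scale per vector; the paper's exact scheme is not described in this article, and the helpers `quantize`, `int_dot` and `quantized_dot` are hypothetical. Floats are mapped to integers in [-127, 127], multiplied and summed as integers, and the result is rescaled back to a float only at the end.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers with one symmetric scale (assumed scheme)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8-bit integers
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp into [-qmax, qmax].
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def int_dot(qa, qb):
    """Integer dot product: small integer inputs, products summed in an accumulator.

    Python ints never overflow; real hardware would use a fixed-width,
    wider-than-8-bit accumulator.
    """
    return sum(a * b for a, b in zip(qa, qb))


def quantized_dot(a, b):
    """Approximate a float dot product using only integer multiply-adds."""
    qa, scale_a = quantize(a)
    qb, scale_b = quantize(b)
    # A single float multiply at the end converts back to real units.
    return int_dot(qa, qb) * scale_a * scale_b


if __name__ == "__main__":
    weights = [0.5, -1.2, 0.3, 2.0]       # made-up "trained" float weights
    activations = [1.0, 0.25, -0.7, 0.1]  # made-up inputs at inference time
    exact = sum(w * x for w, x in zip(weights, activations))
    approx = quantized_dot(weights, activations)
    print(f"float: {exact:.4f}  int8: {approx:.4f}")
```

The two results agree to about two decimal places: 8-bit integers give up a little precision, but integer multipliers are far smaller and cheaper than floating-point ones, which is the trade-off the TPU makes for inference.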

Explaining why would take us into technical details that go well beyond the scope of this article. But the paper cited above is full of information that curious readers will find delightful. For example, one reason the TPUs are faster than GPUs, while using less energy (which is key in a data center), is that they have 25 times more MAC (multiplier-accumulator) units and 3.5 times more on-chip memory than a K80. In numbers, the heart of the chip is a 256 x 256 array of 65,536 8-bit multiplier-accumulators, and the chip has 28 mebibytes (a little more than 29 megabytes) of on-chip memory.
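The figures above can be checked with a few lines of arithmetic. This sketch, using hypothetical helper names, counts the MAC units in a 256 x 256 grid, converts 28 MiB to decimal megabytes, and writes a matrix-vector product as explicit multiply-accumulate steps to show that a 256 x 256 layer needs exactly 65,536 of them. It models the arithmetic only, not how the TPU actually moves data through its array.

```python
# Figures quoted in the article for the first-generation TPU.
ARRAY_ROWS = 256
ARRAY_COLS = 256
ON_CHIP_MIB = 28


def mac_count(rows: int, cols: int) -> int:
    """Number of multiplier-accumulator (MAC) units in a rows x cols grid."""
    return rows * cols


def mib_to_mb(mib: float) -> float:
    """Convert mebibytes (2**20 bytes) to decimal megabytes (10**6 bytes)."""
    return mib * 2**20 / 10**6


def matvec_macs(weights, x):
    """Matrix-vector product written as explicit multiply-accumulate steps.

    Each output element is an accumulator that repeatedly does acc += w * xi,
    the single operation one MAC unit performs. Returns the result vector and
    the total number of MAC operations performed.
    """
    ops = 0
    out = []
    for row in weights:
        acc = 0
        for w, xi in zip(row, x):
            acc += w * xi  # one multiply-accumulate
            ops += 1
        out.append(acc)
    return out, ops


if __name__ == "__main__":
    print(mac_count(ARRAY_ROWS, ARRAY_COLS))   # 65536
    print(round(mib_to_mb(ON_CHIP_MIB), 2))    # 29.36
    layer = [[1] * ARRAY_COLS for _ in range(ARRAY_ROWS)]
    _, ops = matvec_macs(layer, [1] * ARRAY_COLS)
    print(ops == mac_count(ARRAY_ROWS, ARRAY_COLS))  # True
```

In software these 65,536 multiply-adds run one after another; a chip with a dedicated 256 x 256 grid of MAC units has one unit available for each of them, which is why the sheer MAC count matters so much.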

The 17-page paper could be dismissed as yet another cryptic document best left unread. But its significance goes well beyond engineering, or rather, it lies precisely in its engineering. Thanks to TensorFlow, Google got a computer to beat world champions at Go, the complex, millennia-old board game. At the same time, the paper shows that dedicated electronic brains, which Apple and other manufacturers are also building, are setting a strong, perhaps definitive, trend in the silicon industry.

Google is talking about its processing units for artificial intelligence and machine learning, which, for the moment, it will not sell to other companies.

The titans of the hardware industry are developing their own processors, with specialized algorithms and technologies for building artificial intelligence and machine learning systems. Google is one of the companies working hardest on this, as it considers the field vast and important to explore. The first prototype was introduced last year at the Google I/O developer conference under the name Tensor Processing Unit, or TPU, although little had been heard about the project since that day.